LLMOps: Take Your AI Models to Real Production

MLflow · LangSmith · Kubernetes · Guardrails

87% of ML projects never make it to production. LLMOps is the discipline that closes that gap: deployment, monitoring, evaluation, and scaling of language models with engineering rigor.

What Our LLMOps Service Includes

Everything you need to operate LLMs with confidence.

Real-Time AI Observability

You can't improve what you don't measure.

A model in production without observability is a ticking time bomb. LLMOps instruments every call: p50/p95/p99 latency, tokens consumed, cost per request, response quality with automated evaluations, and hallucination detection. A unified dashboard so your team makes data-driven decisions, not gut calls.

Executive Summary

For CEOs and innovation directors.

The MLOps market is estimated at $2.4-4.4 billion in 2025, with a projection of $56-89B by 2035 (39.8% CAGR). Demand for LLMOps professionals far exceeds supply, making outsourcing to a specialized agency the most efficient decision.

87% of machine learning projects never reach production. Not because of a lack of models, but because of a lack of operational infrastructure. LLMOps turns prototypes into business assets: scalable, monitored, and cost-controlled.

Investing in LLMOps is not an additional cost; it's the insurance that your AI investment generates returns. Without operations, a model that works in a notebook is just an expensive experiment.

Summary for CTO / Technical Team

Stack, architecture, and technical decisions.

Model serving: TrueFoundry or vLLM for high-performance inference. Kubernetes (EKS/GKE) for orchestration. GPU scheduling with NVIDIA Triton or Hugging Face TGI (Text Generation Inference). Autoscaling based on queue depth, not CPU.

Continuous evaluation: Braintrust or LangSmith for eval pipelines. Versioned reference datasets. Regression tests before every deploy. Quality metrics: coherence, factuality, relevance, safety. Human-in-the-loop evaluation for edge cases.

Observability: Traces with LangSmith/Braintrust, metrics with Prometheus/Grafana, structured logs. Drift detection with sliding windows. Cost tracking per model, per endpoint, per client. Alerts in PagerDuty/OpsGenie with automated runbooks.

Is It Right for You?

LLMOps requires you to already have AI models or prototypes. If you're still exploring, start with AI consulting.

Who it's for

- Companies with AI prototypes ready to go to production.

- Teams already using LLMs (GPT-4, Claude, Llama) that need to scale.

- Organizations with multiple models that want to unify operations.

- CTOs who need observability and inference cost control.

- Regulated companies that require audit logging and guardrails (AI Act, GDPR).

Who it's not for

- If you don't yet have a defined AI use case (start with consulting).

- Projects that can be solved with a vanilla OpenAI API call with no customization.

- Companies without budget for GPU infrastructure.

- Teams without minimum technical capacity to operate pipelines.

- If you're looking for "an AI that does everything on its own" -- models require oversight.

LLMOps Services

Operational verticals for AI in production.

Model Deployment & Serving

Model containerization, deployment on Kubernetes with GPU scheduling, demand-based autoscaling. Blue-green deployments for zero-downtime updates.

Prompt Engineering as Code

Prompts versioned in Git, evaluated against reference datasets, deployed with CI/CD. A/B testing of prompts to optimize quality and cost simultaneously.

Evaluation and Quality Assurance

Automated evaluation pipelines: factuality, coherence, safety, hallucinations. Human-in-the-loop to calibrate automated evaluators. Quality reports before every release.

Observability & Monitoring

End-to-end traces for every request. Latency, throughput, quality, and cost metrics. Drift detection and performance degradation alerts. Executive and technical dashboards.

FinOps for AI

Cost tracking per request, per model, per client. Inference caching, intelligent batching, model selection by cost/quality. Typical inference cost reduction of 30-60%.

AgentOps & Agentic Systems

Monitoring of multi-step agents: decision traceability, tool control, circuit breakers, and timeouts. The future of LLMOps is operating agents, not just models.

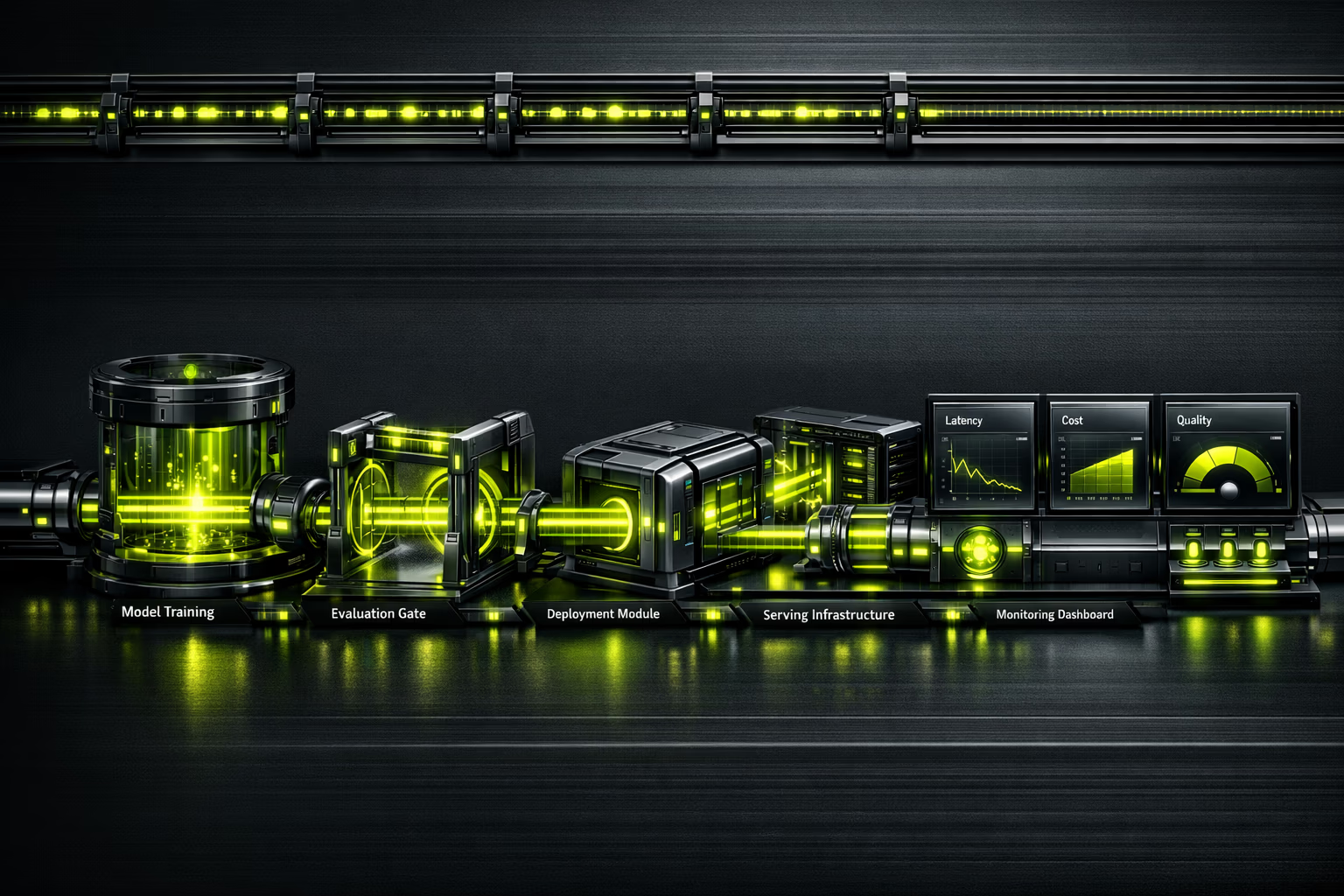

Implementation Process

From prototype to production with guarantees.

Assessment and Design

We evaluate your current models, infrastructure, and production requirements. We design the serving, monitoring, and evaluation architecture. We define SLOs (latency, quality, availability).

CI/CD Pipeline for ML

We configure build, test, and deploy pipelines. Prompt versioning in Git. Curated eval datasets. Automated regression tests with quality thresholds.

Deployment and Observability

Model on Kubernetes with GPU scheduling. Full instrumentation: traces, metrics, logs. Grafana dashboards. Alerts configured with runbooks.

Guardrails and Optimization

Security filters, output validation, rate limiting. Cost optimization: caching, batching, right-sizing. Documentation and knowledge transfer.

Operation and Continuous Improvement

24/7 monitoring. Re-evaluation cycles with production data. A/B testing of models and prompts. Monthly performance and cost reports.

Risks and Mitigation

Operating LLMs carries specific risks. Here's how we manage them.

Hallucinations in production

Guardrails with output validation, RAG for grounding, continuous factuality evaluation. Target rate: <2% critical hallucinations.

Uncontrolled inference costs

FinOps from day 1: per-request tracking, intelligent caching, model routing (cheap model for simple queries, powerful for complex ones). Typical savings: 30-60%.

Silent quality degradation

Eval pipelines with sliding windows detect degradation before users report it. Automatic rollback if quality drops below threshold.

Vendor lock-in with an AI provider

Abstraction layer that allows switching between OpenAI, Anthropic, and open-source models without rewriting the application. Periodic comparative evaluation.

Regulatory non-compliance (AI Act)

Audit logging of every interaction, content guardrails, model decision documentation. Prepared for risk classification under the AI Act.

From Notebook to Production in 6 Weeks

B2C e-commerce with an AI chatbot prototype stuck in a Jupyter notebook. 12-second response latency, no monitoring, unpredictable API costs. We implemented full LLMOps: serving on Kubernetes, caching of frequent responses, model routing by complexity, and content guardrails.

The LLMOps Talent Gap

The outsourcing opportunity.

Demand for LLMOps/MLOps professionals exceeds supply at a 5:1 ratio. Building an internal AI operations team requires ML engineer, platform engineer, and SRE profiles -- salaries totaling EUR 300K+/year. Outsourcing to Kiwop gives you the same expertise without the fixed cost or turnover risk.

Frequently Asked Questions About LLMOps

What decision-makers ask before investing in AI operations.

What's the difference between MLOps and LLMOps?

MLOps is the general discipline for machine learning operations: training pipelines, serving, monitoring. LLMOps extends MLOps with practices specific to language models: prompt versioning, non-deterministic quality evaluation, hallucination control, and token cost optimization.

Do I need LLMOps if I only use the OpenAI API?

Yes. Using an API doesn't eliminate the need for operations: you need to monitor costs, detect quality degradation, manage prompts as code, implement fallbacks when the API goes down, and comply with regulations. LLMOps becomes more critical the more you depend on AI.

How much does LLM inference cost in production?

It depends on volume and model. GPT-4o: ~$2.5 per million input tokens. Claude Sonnet: ~$3. Open-source models (Llama 3): ~$0.2 with your own infrastructure. Typical optimization reduces costs by 30-60% with caching, batching, and model routing.

What is "AgentOps"?

AgentOps is the evolution of LLMOps for agentic systems: models that use tools, make multi-step decisions, and collaborate with each other. It requires decision traceability, circuit breakers, tool control, and timeouts. It is the future of AI operations.

How do you evaluate LLM quality in production?

With automated evaluation pipelines that measure: factuality (does it state truths?), coherence (does it make sense?), relevance (does it answer the question?), and safety (does it generate harmful content?). Complemented with periodic human evaluation to calibrate automated evaluators.

How long does it take to implement LLMOps?

Basic pipeline (serving + monitoring): 4-6 weeks. Complete pipeline (eval, guardrails, FinOps, CI/CD): 8-12 weeks. Depends on model complexity and existing infrastructure.

Can we use open-source models instead of commercial APIs?

Absolutely. Llama 3, Mistral, and Qwen are viable alternatives for many use cases. The advantage: predictable cost, no third-party dependency, data stays on your infrastructure. The trade-off: you need GPUs and expertise to operate. We evaluate the best option for each case.

How does the European AI Act affect AI operations?

The AI Act classifies systems by risk. For high-risk systems: mandatory audit logging, technical documentation, transparency, and human oversight. Well-implemented LLMOps covers these requirements by design: complete traces, documented guardrails, and logs of every interaction.

Are Your AI Models Working in a Notebook but Not in Production?

87% of ML projects stall at experimentation. We take your AI to production with observability, guardrails, and controlled costs.

Talk to an ML engineer Technical

Initial Audit.

AI, security and performance. Diagnosis with phased proposal.

Your first meeting is with a Solutions Architect, not a salesperson.

Request diagnosis