Enterprise RAG: AI That Answers With Your Real Data

Your company has thousands of documents, manuals, and databases that nobody queries efficiently. RAG (Retrieval-Augmented Generation) connects your data with generative AI to deliver precise, cited, and verifiable answers. The RAG market is valued at $1.96B in 2025 and is projected to reach $40.3B by 2035.

Service Deliverables

What you get in a complete RAG system.

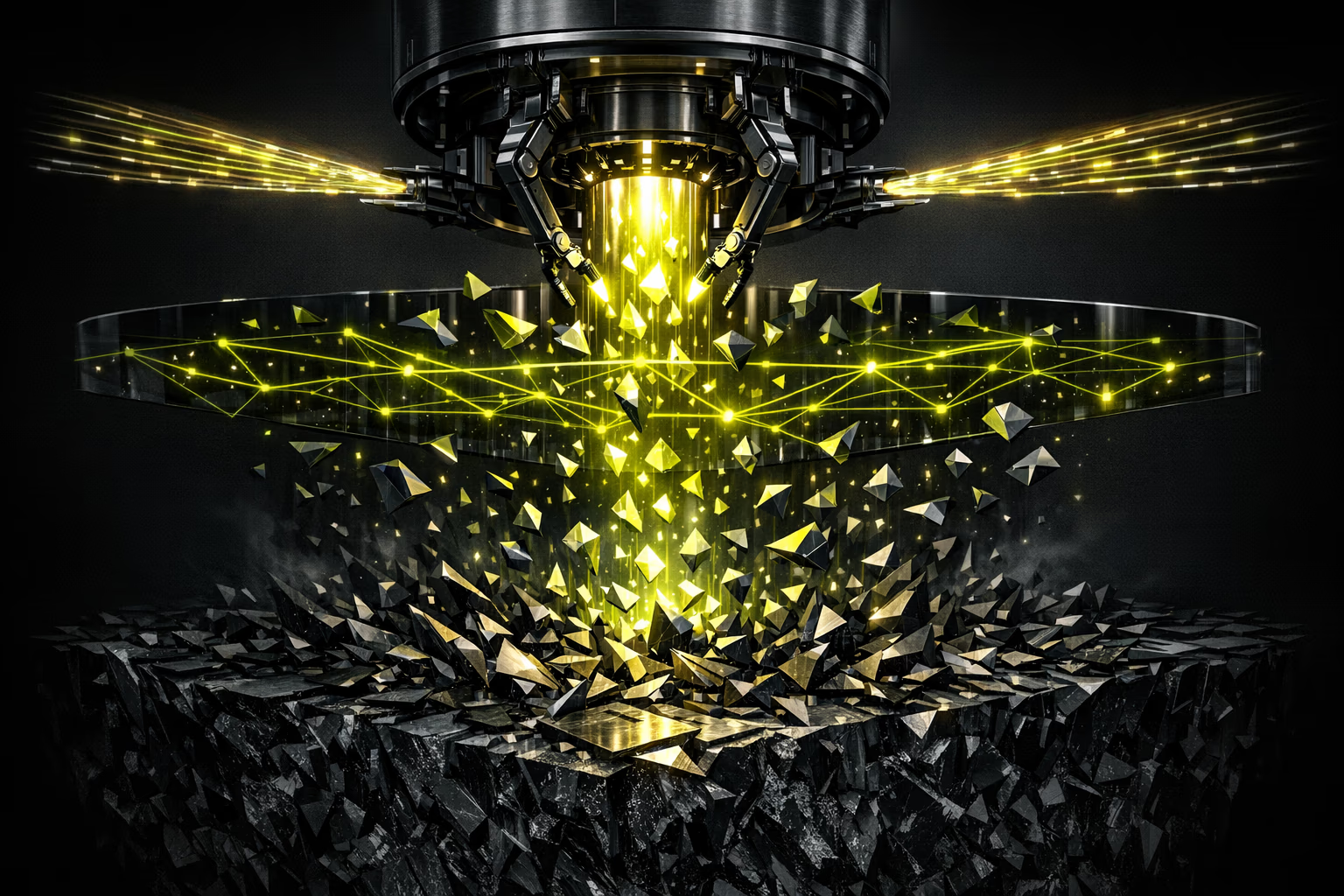

How a RAG System Works

The architecture that keeps hallucinations in check.

RAG combines the best of both worlds: the natural language capabilities of LLMs with the precision of your actual data. When a user asks a question, the system searches for relevant information in your vector knowledge base, injects it into the LLM context, and generates a grounded response with verifiable citations. The result: answers that sound natural but are anchored in real data.
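The flow described above (search, inject, generate) can be sketched in a few lines. This is a minimal illustration, not a production design: the bag-of-words `embed` function is a toy stand-in for a real embedding model, the three sample documents and their contents are invented for the example, and the final call to an LLM is left out, so the sketch stops at the prompt that would be sent.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    # Toy stand-in for an embedding model: lowercase bag-of-words counts.
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two sparse word-count vectors.
    dot = sum(a[term] * b[term] for term in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(question: str, docs: dict[str, str], k: int = 2) -> list[tuple[str, str]]:
    # Rank every document against the question and keep the k best.
    q = embed(question)
    ranked = sorted(docs.items(), key=lambda item: cosine(q, embed(item[1])), reverse=True)
    return ranked[:k]


def build_prompt(question: str, passages: list[tuple[str, str]]) -> str:
    # Inject the retrieved passages, tagged with their source id, into the prompt.
    context = "\n".join(f"[{doc_id}] {text}" for doc_id, text in passages)
    return (
        "Answer using only the sources below and cite them by id.\n"
        f"Sources:\n{context}\n"
        f"Question: {question}"
    )


# Hypothetical sample knowledge base (contents invented for illustration).
docs = {
    "returns.md": "defective products can be returned within 30 days",
    "shipping.md": "standard shipping takes 3 to 5 business days",
    "warranty.md": "the warranty covers defective parts for two years",
}
question = "how can defective products be returned"
passages = retrieve(question, docs, k=2)
prompt = build_prompt(question, passages)
print(prompt)
```

Because each passage carries its source id into the prompt, the model can be asked to cite it, which is what makes the answer verifiable.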

Executive Summary

What you need to know to decide.

Enterprise RAG turns your scattered knowledge base (documents, manuals, FAQs, databases) into an AI system that answers questions with 95%+ accuracy and cites the source. The most immediate use case: customer support with a 50% reduction in L1 tickets.

Typical investment: EUR 45,000-500,000+ depending on complexity and data volume. ROI in 4-8 months for support teams of 10+ people. The primary risk (hallucinations) is mitigated with continuous evaluation and human-in-the-loop for critical decisions.

Technical Summary for CTO

Architecture and implementation details.

Modular architecture: ingestion -> chunking -> embedding -> vectorstore -> retrieval -> reranking -> generation. Each component is interchangeable. Embeddings: OpenAI text-embedding-ada-002, Cohere embed-v3, or open-source models (BGE, E5). Vectorstores: Pinecone (managed), Qdrant (self-hosted), pgvector (PostgreSQL extension).
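One way to keep components interchangeable is to code the pipeline against small interfaces rather than against a specific vendor. The sketch below assumes two hypothetical interfaces, `Embedder` and `VectorStore`, with toy in-memory implementations. None of these names come from Pinecone, Qdrant, or pgvector; a real adapter would wrap the respective client behind the same methods.

```python
from typing import Protocol


class Embedder(Protocol):
    def embed(self, text: str) -> list[float]: ...


class VectorStore(Protocol):
    def add(self, doc_id: str, vector: list[float]) -> None: ...
    def search(self, vector: list[float], k: int) -> list[str]: ...


class CharCountEmbedder:
    """Toy embedder (assumption): [number of vowels, number of other characters]."""

    def embed(self, text: str) -> list[float]:
        vowels = sum(ch in "aeiou" for ch in text.lower())
        return [float(vowels), float(len(text) - vowels)]


class InMemoryStore:
    """Toy store (assumption): brute-force nearest neighbours by squared distance."""

    def __init__(self) -> None:
        self._rows: dict[str, list[float]] = {}

    def add(self, doc_id: str, vector: list[float]) -> None:
        self._rows[doc_id] = vector

    def search(self, vector: list[float], k: int) -> list[str]:
        def distance(other: list[float]) -> float:
            return sum((a - b) ** 2 for a, b in zip(vector, other))

        return sorted(self._rows, key=lambda doc_id: distance(self._rows[doc_id]))[:k]


class Pipeline:
    """Depends only on the two interfaces, so either side can be swapped."""

    def __init__(self, embedder: Embedder, store: VectorStore) -> None:
        self.embedder = embedder
        self.store = store

    def ingest(self, doc_id: str, text: str) -> None:
        self.store.add(doc_id, self.embedder.embed(text))

    def query(self, text: str, k: int = 1) -> list[str]:
        return self.store.search(self.embedder.embed(text), k)


pipeline = Pipeline(CharCountEmbedder(), InMemoryStore())
pipeline.ingest("a", "aaaa")
pipeline.ingest("b", "zzzz")
print(pipeline.query("aaa"))
```

Replacing the embedding model or the vectorstore then means writing one new adapter class. Note that switching embedding models still requires re-embedding the corpus, since vectors from different models are not comparable.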

Evaluation with the RAGAS framework: faithfulness, answer relevance, context precision, context recall. CI/CD pipeline for accuracy regression testing. Monitoring of cost per query, P95 latency, and embedding drift. European servers for GDPR compliance.
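To make the regression-testing idea concrete, here is a simplified sketch of a quality gate that a CI job could run. It does not use the RAGAS library: the two metrics are plain set-based versions of context precision and recall computed against hand-labeled relevant document ids, whereas RAGAS relies on LLM-based judgments. The function names and the 0.6 thresholds are assumptions chosen for illustration.

```python
def context_precision(retrieved: list[str], relevant: set[str]) -> float:
    # Share of retrieved chunks that are actually relevant.
    if not retrieved:
        return 0.0
    return sum(1 for doc_id in retrieved if doc_id in relevant) / len(retrieved)


def context_recall(retrieved: list[str], relevant: set[str]) -> float:
    # Share of relevant chunks that the retriever managed to find.
    if not relevant:
        return 1.0
    return sum(1 for doc_id in relevant if doc_id in retrieved) / len(relevant)


def regression_gate(scores: dict[str, float], thresholds: dict[str, float]) -> list[str]:
    # Names of every metric that fell below its minimum; empty list means pass.
    return [name for name, minimum in thresholds.items() if scores.get(name, 0.0) < minimum]


retrieved = ["a", "b", "c", "d"]   # what the retriever returned for a test query
relevant = {"a", "c", "e"}         # hand-labeled ground truth for that query

scores = {
    "context_precision": context_precision(retrieved, relevant),
    "context_recall": context_recall(retrieved, relevant),
}
failures = regression_gate(scores, {"context_precision": 0.6, "context_recall": 0.6})
print(scores, failures)
```

In a pipeline, a non-empty `failures` list would fail the build, so a change to chunking or prompts that degrades retrieval is caught before deployment.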

Is It Right for You?

Enterprise RAG makes sense when you have valuable data that nobody leverages.

Who it's for

- Companies with extensive knowledge bases (manuals, technical docs, FAQs, regulations).

- Support teams that answer the same questions repeatedly with scattered information.

- Organizations that need AI grounded in proprietary data without sending sensitive information to public models.

- Legal, compliance, or medical departments that need precise, cited answers.

- Companies that want an intelligent internal search engine that understands natural language.

Who it's not for

- Organizations with little documentation or low-quality unstructured data.

- Teams that need creative generative AI (campaigns, content) not anchored in proprietary data.

- Companies without budget to maintain and update the knowledge base.

- Use cases where a traditional keyword search is sufficient.

- Companies whose data isn't digitized yet: digitizing your knowledge comes first.

5 Enterprise RAG Use Cases

Where RAG delivers the highest impact.

Intelligent Customer Support

A chatbot that answers customer queries by searching your knowledge base in real time. Reduces L1 tickets by 50%, responds in seconds, and escalates to a human when confidence is low. With conversation history and a feedback loop for continuous improvement.

Internal Knowledge Assistant

Employees ask in natural language and get answers from internal documentation, policies, and procedures. Reduces time spent searching for information by 40%. Especially valuable for onboarding new hires and distributed teams.

Document Processing

Extracts information from contracts, invoices, reports, and legal documents automatically. Classifies, summarizes, and answers questions about thousands of documents in seconds. Ideal for legal, compliance, and finance departments.

Enterprise Semantic Search

Replaces keyword search with semantic search that understands intent. Users can ask "What is the process for returning a defective product?" instead of typing "return defect". Connects with Confluence, SharePoint, Notion, and internal systems.

Sales Assistant with Product Data

Sales teams query specs, comparisons, and pitch decks in natural language. Generates personalized proposals based on actual product data sheets and client history. 30% reduction in offer preparation time.

Implementation Process

From raw data to a production RAG system.

Data Audit and Design

We evaluate your data sources (documents, databases, APIs), define the chunking and embedding strategy, and design the RAG architecture. Deliverable: technical document with the complete pipeline.

Ingestion and Vectorization

We connect data sources, process documents, and create the vector database. Chunking optimization (size, overlap, metadata). Retrieval testing with real queries from your business.
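The three chunking parameters mentioned above (size, overlap, metadata) can be illustrated with a small word-based splitter. This is a sketch under simple assumptions: it splits on whitespace rather than on tokens or sentence boundaries, and the function name, default values, and metadata fields are hypothetical.

```python
def chunk_words(text: str, source: str, size: int = 200, overlap: int = 40) -> list[dict]:
    """Split text into windows of `size` words that share `overlap` words."""
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("need size > 0 and 0 <= overlap < size")
    words = text.split()
    step = size - overlap
    chunks = []
    for start in range(0, len(words), step):
        window = words[start:start + size]
        # Metadata travels with the chunk so answers can cite their origin.
        chunks.append({"text": " ".join(window), "source": source, "start_word": start})
        if start + size >= len(words):
            break  # this window already reached the end of the document
    return chunks


sample = " ".join(f"w{i}" for i in range(10))
for chunk in chunk_words(sample, source="manual.pdf", size=4, overlap=1):
    print(chunk)
```

Larger overlap lowers the chance of cutting a fact in half at a boundary but increases the number of vectors to store, which is why these values are tuned against real queries.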

Orchestration and Evaluation

We build the complete pipeline: retrieval -> reranking -> generation with citation. Evaluation with RAGAS (faithfulness, relevance, precision). Tuning until quality thresholds are met.

Interface, Deployment, and Monitoring

Frontend (chatbot or search), production deployment, and continuous monitoring: accuracy, latency, costs, and user feedback. 30-day post-launch support included.
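Two of the monitored figures, P95 latency and cost per query, are simple to compute from request logs. The sketch below uses the nearest-rank definition of a percentile (other definitions interpolate and can give slightly different values). The function names and the sample numbers are assumptions for illustration only.

```python
def percentile(values: list[float], pct: int) -> float:
    """Nearest-rank percentile; pct is an integer from 1 to 100."""
    if not values:
        raise ValueError("no samples")
    if not 1 <= pct <= 100:
        raise ValueError("pct must be between 1 and 100")
    ordered = sorted(values)
    # ceil(pct * n / 100) using integer arithmetic to avoid float rounding.
    rank = (pct * len(ordered) + 99) // 100
    return ordered[rank - 1]


def cost_per_query(total_cost: float, query_count: int) -> float:
    # Average spend per query; zero when there was no traffic.
    return total_cost / query_count if query_count else 0.0


latencies_ms = list(range(1, 101))  # hypothetical sample: 1 ms to 100 ms
print(percentile(latencies_ms, 95))
print(cost_per_query(total_cost=12.0, query_count=400))
```

P95 is preferred over the mean here because a handful of slow requests can leave the average looking healthy while one user in twenty waits far longer.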

Risks and Mitigation

Full transparency about RAG challenges.

Hallucinations and incorrect answers

Continuous evaluation with RAGAS (faithfulness >0.9). Mandatory source citation. Confidence thresholds: if the system isn't sure, it says so explicitly instead of inventing an answer.
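A confidence threshold can be as simple as refusing to generate when no retrieved passage scores high enough. The following sketch assumes retrieval scores normalized to the range 0 to 1 and a hypothetical cutoff of 0.5. In practice the cutoff would be calibrated on evaluation data, and `generate` stands in for the LLM call.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Hit:
    source: str
    text: str
    score: float  # retrieval similarity, assumed to be in [0, 1]


FALLBACK = "I don't have enough information to answer that."


def answer_or_abstain(
    hits: list[Hit],
    generate: Callable[[list[Hit]], str],
    min_score: float = 0.5,  # hypothetical cutoff, to be calibrated
) -> dict:
    # Keep only passages the retriever is reasonably confident about.
    confident = [hit for hit in hits if hit.score >= min_score]
    if not confident:
        # Nothing relevant enough: say so instead of letting the model guess.
        return {"answer": FALLBACK, "sources": []}
    return {
        "answer": generate(confident),
        "sources": [hit.source for hit in confident],
    }


def fake_generate(hits: list[Hit]) -> str:
    # Placeholder for the LLM call: echoes the best passage.
    return hits[0].text


weak = [Hit("faq.md", "unrelated text", 0.2)]
strong = [Hit("returns.md", "Returns are accepted.", 0.8), Hit("faq.md", "unrelated", 0.1)]
print(answer_or_abstain(weak, fake_generate))
print(answer_or_abstain(strong, fake_generate))
```

Returning the sources alongside the answer keeps the citation mandatory at the interface level: a caller cannot display an answer without also receiving where it came from.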

Privacy and sensitive data

Processing on European servers (GDPR). On-premise or private cloud deployment options. Granular role-based access: each user sees only what their profile permits.
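Role-based access in a RAG system is typically enforced at retrieval time, so that restricted text never reaches the LLM prompt. Here is a minimal sketch, assuming each chunk carries an `allowed_roles` metadata field (a hypothetical name) populated during ingestion. Managed vectorstores usually offer metadata filters that do the same job inside the query.

```python
def visible_chunks(chunks: list[dict], user_roles: set[str]) -> list[dict]:
    # A chunk is visible when the user holds at least one of its allowed roles.
    return [chunk for chunk in chunks if chunk["allowed_roles"] & user_roles]


# Hypothetical chunks with access metadata attached at ingestion time.
chunks = [
    {"id": "handbook#1", "text": "Office hours and holidays.", "allowed_roles": {"employee", "hr"}},
    {"id": "salaries#4", "text": "Salary bands by level.", "allowed_roles": {"hr"}},
]

print([chunk["id"] for chunk in visible_chunks(chunks, {"employee"})])
print([chunk["id"] for chunk in visible_chunks(chunks, {"hr"})])
```

Filtering before ranking and generation matters: filtering only the final answer would still expose restricted content to the model, which could paraphrase it.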

Scalability with millions of documents

Vectorstores designed to scale: Pinecone supports billions of vectors, Qdrant scales horizontally. Incremental indexing for new documents without reprocessing everything.

Growing API and embedding costs

Per-query budgets with alerts. Semantic cache for repeated queries (-60% costs). More efficient embedding models for high volumes. On-premise open-source model option for fixed cost.
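A semantic cache returns a stored answer when a new question is close enough in embedding space to one already answered, which avoids a second LLM call. The sketch below is illustrative only: the vocabulary-count `toy_embed` function stands in for a real embedding model, the 0.9 similarity threshold is an assumed value, and the linear scan would be replaced by a vector index at scale.

```python
import math
from typing import Callable, Optional

Vector = list[float]


def cosine(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class SemanticCache:
    def __init__(self, embed: Callable[[str], Vector], threshold: float = 0.9) -> None:
        self.embed = embed
        self.threshold = threshold  # assumed value; tune on real traffic
        self.entries: list[tuple[Vector, str]] = []

    def get(self, question: str) -> Optional[str]:
        # Return the cached answer of the most similar past question, if close enough.
        query = self.embed(question)
        best = max(self.entries, key=lambda entry: cosine(query, entry[0]), default=None)
        if best is not None and cosine(query, best[0]) >= self.threshold:
            return best[1]
        return None

    def put(self, question: str, answer: str) -> None:
        self.entries.append((self.embed(question), answer))


VOCAB = ["reset", "password", "refund", "invoice"]


def toy_embed(text: str) -> Vector:
    # Toy embedding (assumption): how often each vocabulary word appears.
    lowered = text.lower()
    return [float(lowered.count(word)) for word in VOCAB]


cache = SemanticCache(toy_embed)
cache.put("How do I reset my password?", "Use the 'Forgot password' link.")
print(cache.get("password reset steps"))   # similar wording: served from cache
print(cache.get("Where is my invoice?"))   # unrelated: cache miss
```

The threshold is the main design choice: set too low, users get answers to a different question; set too high, the cache rarely hits and saves little.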

Real-World AI and Data Integration Experience

We've been integrating systems and data for 15+ years for European companies. Since 2023, we've deployed production RAG solutions for clients with knowledge bases spanning thousands of documents. We're not a research lab: we build systems that work in the real world with real data and GDPR compliance.

Frequently Asked Questions

What our clients ask about RAG.

What is RAG and why does my company need it?

RAG (Retrieval-Augmented Generation) is an architecture that connects your data with generative AI. Instead of the LLM "inventing" answers, it searches for relevant information in your documents and generates responses anchored in real data. Your company needs it if you have valuable knowledge scattered across documents that nobody queries efficiently.

How are hallucinations kept under control?

Three layers of protection: 1) Grounding: the LLM only generates answers based on retrieved documents. 2) Mandatory citation: every answer includes the source and the exact fragment. 3) Confidence thresholds: if relevance is low, the system responds "I don't have enough information" instead of making something up.

How much does it cost to implement a RAG system?

Basic project (1 data source, simple chatbot): EUR 45,000-80,000. Mid-tier project (multiple sources, semantic search, evaluation): EUR 80,000-200,000. Enterprise project (multi-tenant, on-premise, complex integrations): EUR 200,000-500,000+. Always with a detailed proposal and estimated ROI.

How long does implementation take?

A functional RAG system in production: 6-10 weeks. Includes data audit, ingestion, vectorization, retrieval pipeline, evaluation, interface, and deployment. Enterprise projects with multiple integrations: 12-16 weeks. A functional prototype is available by week 4.

Is it secure? Is my data protected?

Yes. Processing on European servers with full GDPR compliance. Deployment options: private cloud, on-premise, or hybrid. Your data is never used to train third-party models. Granular role-based access and complete query audit trail.

What document formats are supported?

Virtually all of them: PDF, Word, Excel, PowerPoint, HTML, Markdown, Confluence, SharePoint, Notion, Google Docs, SQL databases, REST APIs, and plain text. We use Unstructured.io for advanced processing of complex documents with tables, images, and irregular layouts.

Does it update automatically when data changes?

Yes. We configure incremental ingestion: when a document is added or modified, it's reprocessed and updated in the vector database automatically. Options: webhooks (real-time), cron jobs (periodic), or manual trigger. No need to re-index the entire database.
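Incremental ingestion comes down to detecting which documents changed since the last run. One common approach, sketched here under simple assumptions, is to store a content hash per document and compare it on each sync. The function names are hypothetical, and the actual re-chunking, re-embedding, and vectorstore calls are left out.

```python
import hashlib


def content_hash(text: str) -> str:
    # Stable fingerprint of a document's content.
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


def plan_sync(
    current_docs: dict[str, str],    # doc_id -> current text at the source
    indexed_hashes: dict[str, str],  # doc_id -> hash stored at last indexing
) -> tuple[list[str], list[str]]:
    # New or modified documents need re-chunking and re-embedding.
    to_upsert = [
        doc_id
        for doc_id, text in current_docs.items()
        if indexed_hashes.get(doc_id) != content_hash(text)
    ]
    # Documents that disappeared from the source must leave the index too.
    to_delete = [doc_id for doc_id in indexed_hashes if doc_id not in current_docs]
    return sorted(to_upsert), sorted(to_delete)


indexed = {
    "a": content_hash("v1"),
    "b": content_hash("old text"),
    "c": content_hash("removed later"),
}
current = {"a": "v1", "b": "new text", "d": "brand new"}
print(plan_sync(current, indexed))
```

The same plan works regardless of the trigger: a webhook would call it for a single document, while a cron job would run it over the whole source.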

Can I use open-source models instead of OpenAI?

Absolutely. Our architecture is model-agnostic. You can use Llama 3, Mistral, Mixtral, or any HuggingFace model deployed on your own infrastructure. This removes vendor dependency and per-query API fees, leaving only infrastructure costs. Ideal for highly sensitive data that cannot leave your network.

How Much Knowledge Is Your Company Losing Every Day?

Free knowledge base audit. We evaluate your data sources, estimate the impact of a RAG system, and design the architecture. No commitment.

Request RAG audit

Technical Initial Audit.

AI, security and performance. Diagnosis with phased proposal.

Your first meeting is with a Solutions Architect, not a salesperson.

Request diagnosis